Published on 11/16/2016 | Technology

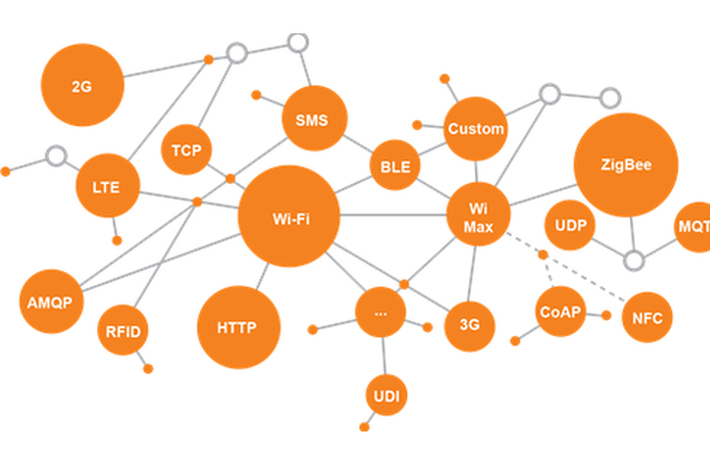

IoT sensors are generally embedded devices with a small memory footprint, limited battery power and a limited battery lifetime. From an IoT network architecture design perspective, the power efficiency of the protocol, range, node size, IoT gateway downlink wireless bandwidth/capacity and similar factors become critical when selecting the right wireless access technology for a particular IoT use case and customer problem. The following are some important parameters to consider when selecting a wireless access technology (ZigBee, Wi-Fi (802.11ah, 802.11ax), RFID, ANT, 6LoWPAN, Z-Wave, NIKE, NFC, IrDA).

Protocol efficiency has a significant bearing on the amount of useful data that can be transferred on a single battery charge of an IoT sensor.

An example calculation of BLE's protocol efficiency, using an advertising packet:

- Preamble = 1 octet
- Access Address = 4 octets
- PDU (Protocol Data Unit, i.e. the packet or message) = 39 octets, made up of:
  - Advertising Header = 1 octet
  - Payload length = 1 octet
  - Advertiser Address = 6 octets
  - Payload = 31 octets
- CRC (Cyclic Redundancy Check) = 3 octets

Total length = 1 + 4 + 39 + 3 = 47 octets

BLE protocol efficiency = Payload / Total length = 31/47 ≈ 0.66, i.e. about 66% efficient
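The efficiency arithmetic can be checked with a short Python sketch. It assumes the same field sizes listed for the advertising packet and treats every non-payload octet as overhead. The names `OVERHEAD_OCTETS`, `frame_length` and `protocol_efficiency` are illustrative only. Because the overhead is a fixed 16 octets, a smaller payload gives a lower efficiency.

```python
# Overhead field sizes in octets, from the BLE advertising example
# (the 39-octet PDU is split into its non-payload parts plus the payload).
OVERHEAD_OCTETS = {
    "preamble": 1,
    "access_address": 4,
    "advertising_header": 1,
    "payload_length": 1,
    "advertiser_address": 6,
    "crc": 3,
}


def frame_length(payload_octets: int) -> int:
    """Total on-air length of the packet for a given payload size."""
    return sum(OVERHEAD_OCTETS.values()) + payload_octets


def protocol_efficiency(payload_octets: int) -> float:
    """Fraction of transmitted octets that carry useful payload."""
    return payload_octets / frame_length(payload_octets)


if __name__ == "__main__":
    for payload in (31, 10):
        print(payload, frame_length(payload), round(protocol_efficiency(payload), 3))
```

With a 31-octet payload, the sketch reproduces the 47-octet total and roughly 66% efficiency. With a 10-octet payload, the same fixed overhead drops the efficiency below 40%.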

Power efficiency is a frequent concern for customers who want to prolong the battery life of their devices while still delivering a good user experience (UX).

For example, when a mobile handset needs to synchronize email, the handset's battery (with a fixed mAh capacity) must last long enough for all the emails (a fixed quantity) to be downloaded and read by the user. Which wireless technology on the handset would be more efficient: Wi-Fi or cellular?

An example calculation of BLE's power efficiency:

Power consumption = 49 µA × 3 V = 0.147 mW

Throughput = 20 bytes × (1 s / 500 ms) × 3 channels = 120 bytes/second

Bits per second = 120 bytes/second × 8 = 960 bits/second

Energy per bit = 0.147 mW / 960 bits/second ≈ 0.153 µJ/bit (about 153 nJ per bit)
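Dividing average power by throughput gives energy per bit. That figure links back to how much useful data one battery charge can carry. The following is a minimal sketch, assuming the 49 µA is the average current during the transfer and that the battery is ideal, with no conversion losses or self-discharge. The 225 mAh coin-cell capacity in the usage lines is a hypothetical value, not from the article.

```python
def energy_per_bit_joules(current_a, voltage_v, bytes_per_packet, interval_s, channels):
    """Average power divided by throughput gives energy per bit (J/bit)."""
    power_w = current_a * voltage_v
    # One packet of bytes_per_packet on each channel per interval, as in the worked example.
    bits_per_second = bytes_per_packet * 8 * channels / interval_s
    return power_w / bits_per_second


def bits_per_battery(capacity_mah, voltage_v, joules_per_bit):
    """Rough number of bits an ideal battery could deliver at this energy cost."""
    battery_joules = capacity_mah / 1000 * 3600 * voltage_v
    return battery_joules / joules_per_bit


if __name__ == "__main__":
    e_bit = energy_per_bit_joules(49e-6, 3.0, 20, 0.5, 3)
    print(f"{e_bit * 1e9:.0f} nJ/bit")
    # Hypothetical 225 mAh coin cell at 3 V.
    print(f"{bits_per_battery(225, 3.0, e_bit):.2e} bits per battery")
```

Energy per bit (J/bit) is the figure of merit to compare across technologies. Power per bit is not, because the unit W/bit mixes a rate with a count.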

The Wi-Fi standard has evolved to address the power constraints of IoT sensors. With IEEE 802.11ah, Wi-Fi IoT sensors generally send their critical data to a nearby Wi-Fi IoT gateway and then go into sleep mode until their Target Wake Time (TWT) arrives.

802.11ah enhancements for reducing power consumption include non-TIM (Traffic Indication Map) operation, the Target Wake Time (TWT) mechanism, and extended sleep and listen intervals.
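Why TWT and long sleep intervals matter comes down to duty-cycle arithmetic: the average current is the time-weighted mix of awake and asleep current. This sketch assumes a node that wakes once per interval, transmits, and sleeps for the rest of the interval. The radio figures (20 mA awake for 10 ms, 5 µA asleep, 1000 mAh battery) are hypothetical and do not come from the 802.11ah standard.

```python
def average_current_ua(active_ua, active_ms, sleep_ua, wake_interval_ms):
    """Time-weighted average current for a node that wakes once per interval."""
    if not 0 < active_ms <= wake_interval_ms:
        raise ValueError("active time must fit inside the wake interval")
    sleep_ms = wake_interval_ms - active_ms
    return (active_ua * active_ms + sleep_ua * sleep_ms) / wake_interval_ms


def battery_life_days(capacity_mah, avg_current_ua):
    """Idealized lifetime: capacity divided by average current."""
    hours = capacity_mah * 1000 / avg_current_ua
    return hours / 24


if __name__ == "__main__":
    # Hypothetical radio: 20 mA while awake for 10 ms, 5 uA asleep, 1000 mAh battery.
    for interval_ms in (100, 1_000, 10_000):
        avg = average_current_ua(20_000, 10, 5, interval_ms)
        print(interval_ms, round(avg, 1), round(battery_life_days(1000, avg)))
```

Stretching the wake interval from 100 ms to 10 s cuts the average current by roughly two orders of magnitude in this example. Negotiating a long TWT aims for exactly this effect.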

Reliable packet transfer directly affects battery life and user experience. Generally speaking, if a data packet cannot be delivered because of a poor transmission environment, accidental interference from nearby radios or deliberate frequency jamming, the transmitter keeps retrying until the packet is successfully delivered, at the expense of battery life. If a wireless system is restricted to a single channel, its reliability may deteriorate in congested environments.
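The battery cost of retries can be quantified. If each attempt fails independently with packet error rate p, the number of attempts follows a geometric distribution with mean 1/(1 − p). This sketch assumes independent failures and a fixed energy cost per attempt. The 10 µJ figure used in the test is hypothetical.

```python
def expected_attempts(per, max_attempts=None):
    """Mean transmissions per packet when each attempt fails independently with probability per."""
    if not 0 <= per < 1:
        raise ValueError("packet error rate must be in [0, 1)")
    if max_attempts is None:
        return 1 / (1 - per)  # unlimited retries: mean of a geometric distribution
    # With at most N attempts: 1 + p + p^2 + ... + p^(N-1)
    return (1 - per ** max_attempts) / (1 - per)


def delivery_probability(per, max_attempts):
    """Chance that at least one of max_attempts attempts succeeds."""
    return 1 - per ** max_attempts


def energy_per_delivered_packet_uj(energy_per_attempt_uj, per):
    """Energy spent per successfully delivered packet with unlimited retries."""
    return energy_per_attempt_uj * expected_attempts(per)


if __name__ == "__main__":
    for per in (0.0, 0.1, 0.5, 0.9):
        print(per, round(energy_per_delivered_packet_uj(10, per), 1))
```

At a 50% packet error rate, every delivered packet costs twice the energy. At 90%, it costs ten times as much, which is why a jammed single-channel link drains batteries.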

A proven method for overcoming interference is channel hopping, as implemented in Bluetooth, and channel hopping has been carried forward to Bluetooth Low Energy (BLE). Bluetooth devices use Adaptive Frequency Hopping (AFH), which allows each node to map out frequently congested parts of the spectrum so that they can be avoided in future transactions.
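The following sketch shows adaptive hopping in the style of BLE's Channel Selection Algorithm #1. The sketch hops across the 37 data channels by a fixed increment (5 to 16) and remaps any channel marked unused onto the table of good channels. It is simplified, and the rule for building the channel map (drop channels whose observed packet error rate exceeds a threshold) is a hypothetical policy, not the one any particular stack uses.

```python
NUM_DATA_CHANNELS = 37  # BLE data channels 0-36


def build_channel_map(per_by_channel, max_per=0.1):
    """Keep channels whose observed packet error rate is at or below max_per (hypothetical rule)."""
    used = sorted(ch for ch, per in per_by_channel.items() if per <= max_per)
    if len(used) < 2:
        raise ValueError("a BLE channel map needs at least two used channels")
    return used


def hop_sequence(hop_increment, used_channels, n_events, last_unmapped=0):
    """Data channels for the next n_events connection events."""
    if not 5 <= hop_increment <= 16:
        raise ValueError("hop increment must be between 5 and 16")
    table = sorted(used_channels)
    used = set(table)
    channels = []
    for _ in range(n_events):
        last_unmapped = (last_unmapped + hop_increment) % NUM_DATA_CHANNELS
        if last_unmapped in used:
            channels.append(last_unmapped)
        else:
            # Remap a blocked channel onto the table of good channels.
            channels.append(table[last_unmapped % len(table)])
    return channels


if __name__ == "__main__":
    # Hypothetical measurements: channels 0-9 are congested (e.g. by a nearby Wi-Fi AP).
    observed = {ch: (0.4 if ch < 10 else 0.01) for ch in range(NUM_DATA_CHANNELS)}
    good = build_channel_map(observed)
    print(hop_sequence(5, good, 8))
```

Every channel in the resulting sequence comes from the good set, so later transactions avoid the congested part of the band.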

ANT is specified to operate over 8 channels; however, sensor node chipsets often operate on only a single channel. ANT employs a Time Division Multiplexing (TDM) scheme, which increases reliability. ANT networks also use a technique known as bursting, which uses the available spectrum aggressively and is known to block other ANT devices in the vicinity. ANT+ recognizes this and recommends that file transfers be conducted only on a clear channel.
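The next sketch shows why TDM helps and bursting hurts, using generic static TDM: each node gets its own slot in a repeating frame, and a node that keeps transmitting past its slot collides with its neighbours. It is not ANT's actual channel timing, which this sketch does not model. The node names, frame length and slot length are hypothetical.

```python
from collections import defaultdict


def assign_slots(node_ids, frame_ms, slot_ms):
    """Give each node its own time slot (offset in ms) within a repeating frame."""
    slots_per_frame = frame_ms // slot_ms
    if len(node_ids) > slots_per_frame:
        raise ValueError("not enough slots in the frame for every node")
    return {node: index * slot_ms for index, node in enumerate(node_ids)}


def collisions(schedule, slot_ms, frame_ms, burst_slots=None):
    """Return {slot index: nodes} for every slot claimed by more than one node.

    burst_slots maps a node to the number of consecutive slots it occupies
    (1 if absent), modelling a node that keeps transmitting past its slot.
    """
    burst_slots = burst_slots or {}
    slots_per_frame = frame_ms // slot_ms
    owners = defaultdict(list)
    for node, offset_ms in schedule.items():
        first_slot = offset_ms // slot_ms
        for k in range(burst_slots.get(node, 1)):
            owners[(first_slot + k) % slots_per_frame].append(node)
    return {slot: nodes for slot, nodes in owners.items() if len(nodes) > 1}


if __name__ == "__main__":
    schedule = assign_slots(["hr", "speed", "cadence"], frame_ms=250, slot_ms=50)
    print(collisions(schedule, 50, 250))               # regular traffic only
    print(collisions(schedule, 50, 250, {"hr": 3}))    # "hr" bursts over 3 slots
```

With one slot per node, nothing collides. Once one node bursts across three slots, it overruns the other two nodes' slots.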

ZigBee implements a technique known as frequency agility (not hopping). A network node can scan for clear spectrum using Clear Channel Assessment (CCA) and report its findings back to the ZigBee coordinator, so that a new channel can be adopted across the network. While this method works most of the time, under severe congestion or interference the scan reports may not be able to propagate across the mesh.
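On the coordinator side, frequency agility amounts to choosing a channel from whatever scan reports arrive. The sketch below uses a hypothetical policy. It takes the worst energy any node reported on each 802.15.4 channel (11 to 26 in the 2.4 GHz band) and moves to the quietest channel only if the improvement exceeds a margin, which avoids flapping between channels. If no reports make it across the mesh, it stays put. The message exchange defined by the ZigBee specification is not modelled here.

```python
ZIGBEE_24GHZ_CHANNELS = range(11, 27)  # IEEE 802.15.4 2.4 GHz channels 11-26


def pick_channel(scan_reports, current_channel, min_gain_db=6.0):
    """Choose the channel whose worst reported energy is lowest (hypothetical policy).

    scan_reports: one dict per node report that reached the coordinator,
    mapping channel number -> measured energy in dBm.
    """
    worst = {}
    for report in scan_reports:
        for channel, energy_dbm in report.items():
            if channel in ZIGBEE_24GHZ_CHANNELS:
                worst[channel] = max(energy_dbm, worst.get(channel, float("-inf")))
    if not worst:
        # No usable reports made it across the mesh: stay on the current channel.
        return current_channel
    best = min(worst, key=lambda ch: (worst[ch], ch))
    if current_channel in worst and worst[current_channel] - worst[best] < min_gain_db:
        return current_channel  # improvement too small to justify a network-wide move
    return best


if __name__ == "__main__":
    reports = [{11: -60, 15: -85, 20: -70}, {11: -55, 15: -80, 20: -90}]
    print(pick_channel(reports, current_channel=11))
```

Using the worst-case reading per channel means one node near an interferer can veto a channel. That is conservative, but it protects the nodes most likely to lose connectivity.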

The range of a wireless technology is often thought of as being proportional to the Radio Frequency (RF) sensitivity of the receiver and the power of the transmitter. This is true to some extent, but many other factors affect the real range of wireless devices, for example the environment, the carrier frequency, the design layout, the mechanics and the coding scheme. For sensor applications, range can be an important factor. Range is usually stated for an ideal environment, but devices are often used in congested spectrum and shielded environments. For example, Bluetooth is quoted as a 10-meter technology, yet it can struggle to deliver a reliable Advanced Audio Distribution Profile (A2DP) stream from a phone in a pocket to a headset because of shielding by the body. Similar problems can be observed in health and fitness IoT use cases, where users wear body-mounted gadgets and move continuously. It's worth noting that 2.4 GHz signals are easily attenuated by the human body.
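The effect of carrier frequency and extra losses such as body shadowing on range can be estimated with a simple free-space link budget. The sketch uses the Friis free-space path loss, FSPL = 20·log10(4πdf/c), and treats body shadowing as a single lumped loss in dB. Free space is an idealization, and indoor and on-body ranges will be shorter. The transmit power (0 dBm), sensitivity (−90 dBm) and loss values are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss (Friis): 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)


def free_space_range_m(tx_power_dbm, rx_sensitivity_dbm, freq_hz, extra_loss_db=0.0):
    """Distance at which free-space loss consumes the whole link budget."""
    budget_db = tx_power_dbm - rx_sensitivity_dbm - extra_loss_db
    return SPEED_OF_LIGHT * 10 ** (budget_db / 20) / (4 * math.pi * freq_hz)


if __name__ == "__main__":
    # Hypothetical radio: 0 dBm transmit power, -90 dBm receiver sensitivity.
    for loss_db in (0, 10, 20):
        print(loss_db, round(free_space_range_m(0, -90, 2.4e9, loss_db), 1))
    # Extra free-space loss at 2.4 GHz versus a sub-1 GHz band (e.g. 802.11ah).
    print(round(fspl_db(10, 2.4e9) - fspl_db(10, 900e6), 2))
```

In free space, every extra 20 dB of loss (for example, from a body in the path) cuts the range by a factor of 10. At the same distance, 2.4 GHz loses about 8.5 dB more than 900 MHz.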

Apart from the parameters above, two more factors matter when selecting a wireless access technology: the cost of supporting it on an IoT sensor, and the end-to-end latency (the wireless access technology's latency plus that of the IoT software stack). For example, CoAP (Constrained Application Protocol) is used at the application layer of IoT stacks because it is lightweight and runs over UDP with less overhead. Although CoAP uses datagrams, it can still provide reliable delivery. Confirmable messages are acknowledged and retransmitted with exponential back-off, and message IDs allow duplicates to be detected. CoAP packets are small because CoAP uses a compact binary header, unlike HTTP, which uses verbose text headers.
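This Python sketch illustrates both CoAP points from RFC 7252. First, it shows the compact 4-byte binary header (version, type, token length, code, message ID) followed by the token and a Uri-Path option. Second, it shows the doubling retransmission timeouts for Confirmable messages, using the RFC's default ACK_TIMEOUT, ACK_RANDOM_FACTOR and MAX_RETRANSMIT. It only handles short options, and the resource path `temp` and HTTP host `sensor` are hypothetical.

```python
import struct

# Constants from RFC 7252 (CoAP).
COAP_VERSION = 1
MESSAGE_TYPES = {"CON": 0, "NON": 1, "ACK": 2, "RST": 3}
CODE_GET = 0x01          # code 0.01
OPTION_URI_PATH = 11
ACK_TIMEOUT = 2.0        # seconds
ACK_RANDOM_FACTOR = 1.5
MAX_RETRANSMIT = 4


def encode_get(path_segments, message_id, token=b"", confirmable=True):
    """Encode a CoAP GET request with no payload (short options only)."""
    if len(token) > 8:
        raise ValueError("token must be 0-8 bytes")
    msg_type = MESSAGE_TYPES["CON" if confirmable else "NON"]
    first_byte = (COAP_VERSION << 6) | (msg_type << 4) | len(token)
    packet = bytearray(struct.pack("!BBH", first_byte, CODE_GET, message_id))
    packet += token
    previous_option = 0
    for segment in path_segments:
        value = segment.encode("utf-8")
        if len(value) > 12:
            raise ValueError("this sketch skips extended option lengths")
        delta = OPTION_URI_PATH - previous_option  # option numbers are delta-encoded
        packet.append((delta << 4) | len(value))
        packet += value
        previous_option = OPTION_URI_PATH
    return bytes(packet)


def retransmit_timeouts(initial_timeout):
    """Wait after the first transmission and after each retransmission (doubling)."""
    if not ACK_TIMEOUT <= initial_timeout <= ACK_TIMEOUT * ACK_RANDOM_FACTOR:
        raise ValueError("initial timeout must lie in [ACK_TIMEOUT, ACK_TIMEOUT * ACK_RANDOM_FACTOR]")
    return [initial_timeout * 2 ** attempt for attempt in range(MAX_RETRANSMIT + 1)]


if __name__ == "__main__":
    coap = encode_get(["temp"], message_id=0x1234, token=b"\x01")
    http = b"GET /temp HTTP/1.1\r\nHost: sensor\r\n\r\n"
    print(len(coap), "bytes (CoAP) vs", len(http), "bytes (HTTP)")
    print(retransmit_timeouts(3.0))
```

The CoAP request is 10 bytes, against 36 bytes for a minimal HTTP/1.1 request for the same resource. With the largest allowed initial timeout of 3 s, the waits sum to 93 s, which matches RFC 7252's MAX_TRANSMIT_WAIT.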

Other Factors

From an IoT network architecture perspective, we also need to look at node size, system capacity at the IoT edge of the network, and whether a particular wireless access technology allows the IoT sensors/nodes to be coordinated and self-healed, for example through Self-Organizing Network (SON) capabilities. This is also a critical aspect of selecting the right wireless access technology. For example, Wi-Fi provides a Radio Resource Management (RRM) based approach, and Bluetooth allows a secondary master to take over in case the primary fails.

To sum up, no single wireless access technology can solve every IoT use case and business problem across the different IoT verticals. When selecting a wireless access technology, one has to make a careful trade-off between all of the factors above: cost/economics, range, power efficiency, required throughput, node density and latency (soft real-time, hard real-time or mission-critical). One should also consider how seamlessly the new IoT network can be integrated with the existing network architecture.

This article was originally posted on LinkedIn.